Artificial intelligence (AI) in healthcare

Artificial intelligence is fundamentally transforming healthcare, from faster diagnostics and robotic surgery to smarter operations management. These innovations improve the efficiency and quality of care, but they also bring entirely new legal challenges and risks. For companies with dozens to hundreds of employees, and for their investors and managers, it is crucial that these innovations become a competitive advantage rather than a source of chaos, wasted time, and multimillion fines. Legal certainty is the cornerstone of successful AI implementation in healthcare.

ARROWS Law Firm has experience helping doctors and healthcare facilities implement artificial intelligence in their practices safely and legally. As the “Innovative Law Firm of the Year 2024” in the artificial intelligence category, ARROWS understands the challenges companies face and is able to combine innovation with legal certainty.

In the field of artificial intelligence in healthcare, there is a marked gap between rapid technological progress and the slower development of legal and regulatory frameworks. While AI applications keep expanding and improving, legislation is often described as “in its infancy” or as lacking a “clear line of responsibility.”

In addition, Czech companies express concerns about their lack of preparedness for key regulations such as the EU AI Act. This mismatch between innovation and regulation creates an environment of uncertainty and increased risk of legal disputes and financial penalties for companies implementing AI. A proactive approach to legal advice in this dynamic environment is therefore becoming essential to ensure stability and long-term success.

What ARROWS law firm does most often for companies in the field of AI in healthcare:

ARROWS law firm solves complex legal challenges related to artificial intelligence in healthcare for its clients.

Comprehensive legal assistance in the implementation of AI systems

ARROWS lawyers provide comprehensive legal assistance in the implementation of AI in healthcare facilities. A legal framework for the use of AI tools is established from the outset to ensure that innovations meet all legislative requirements and patient safety standards. ARROWS helps assess whether the AI software used falls under the Medical Device Regulation (MDR) or other standards and helps obtain the necessary approvals and permits. Thanks to this support, clients can deploy AI solutions quickly and without fear of legal complications.

Ensuring compliance with GDPR, MDR, and the new EU AI Act

ARROWS lawyers ensure that clients' AI systems and procedures comply with current healthcare regulations. They help set up processes to meet patient data protection requirements under the GDPR and ensure that technology complies with regulations such as the European Medical Device Regulation (MDR) and the Czech Health Services Act. With ARROWS, clients can always stay one step ahead of changing regulations, minimizing the risk of fines and legal disputes while strengthening the trust of patients and partners.

Setting responsibility for AI tool decisions and minimizing risks

ARROWS advises on how to properly allocate responsibility when using AI in treatment or operations. It helps set internal rules and contractual arrangements so that it is clear who is responsible for AI outputs and under what circumstances. Current European legislation emphasizes that liability for damage lies with humans, not machines. The AI Act has already been adopted, and the proposed AI Liability Directive was designed to harmonize the rules on compensation for damage caused by AI.

ARROWS experts monitor these changes and ensure that clients have legal guidance on how to proceed in the event of AI errors, so that their facilities are not vulnerable to claims from patients or regulatory authorities.

Contractual arrangements with technology suppliers for maximum protection of interests

ARROWS helps negotiate and prepare contracts with AI system suppliers to maximize the protection of clients' interests. The contracts set out a clear division of rights and obligations between the client and the AI solution supplier, including special provisions for the implementation and operation of AI tools. They also address warranties and service support: who is responsible for any system errors, what claims arise from defective performance, and how upgrades and maintenance are handled.

It also focuses on data protection when transferring data to the technology provider and on software ownership or licensing. A well-drafted contract provides certainty that, in the event of problems with the AI product supplied, the client has solid legal support and the possibility of seeking redress.

Creation of internal guidelines and staff training for the safe and ethical use of AI

Staff training and clear internal rules are also key to the successful and safe use of AI technologies in healthcare. ARROWS will prepare training for clients' employees focused on the legal aspects of working with AI – from personal data protection and system failure procedures to ethical principles for using AI in patient care.

We will help create clear internal guidelines for the use of AI tools so that everyone knows how to work with AI outputs correctly and when human supervision is necessary. With trained staff and well-established processes, errors can be prevented, the safety of care increases, and regulatory authorities can see that the facility takes compliance seriously.

Legal audits and compliance checks of AI solutions

ARROWS identifies potential risks associated with the deployment of AI and performs legal audits of clients' solutions. It assesses the compliance of existing AI systems with all requirements (from GDPR to security standards) and highlights any gaps. The audit may also include an assessment of cybersecurity or ethical risks of algorithms. Clients gain a clear overview of their organization's legal compliance status and recommendations for specific improvements.

ARROWS helps implement ongoing control and monitoring mechanisms to keep AI tools safe and compliant throughout their use. This minimizes the risk of unexpected incidents, data leaks, or sanctions, allowing clients to focus fully on providing quality healthcare.

Effective dispute resolution and liability for harm caused by AI

If a dispute or damage arises in connection with the use of AI, ARROWS stands by its clients. We represent them in legal disputes and help resolve claims by patients or third parties for compensation for damage caused by an incorrect decision made by an AI system. ARROWS has experience in handling sensitive cases where technology has failed, from negotiating out-of-court settlements to litigation. ARROWS' strategy emphasizes protecting our clients' reputation and finances.

While there is often talk of the legal risks associated with artificial intelligence in healthcare, such as fines for GDPR violations or liability issues, it is important to recognize that AI is also a powerful tool for achieving and maintaining compliance. Research shows that AI systems can automate compliance checks, monitor regulatory changes, detect fraud, enhance data protection, and streamline reporting.

Examples include AI-driven verification of healthcare credentials, real-time record checks, and predictive analytics for risk mitigation. This dual nature of AI—as both a source of risk and a solution for compliance—enables ARROWS to offer services that not only mitigate potential problems but also help clients strategically leverage AI to achieve greater efficiency and cost savings. This transforms legal advice from mere “fine avoidance” to a partnership that delivers tangible operational and competitive advantage.

Who can you contact?

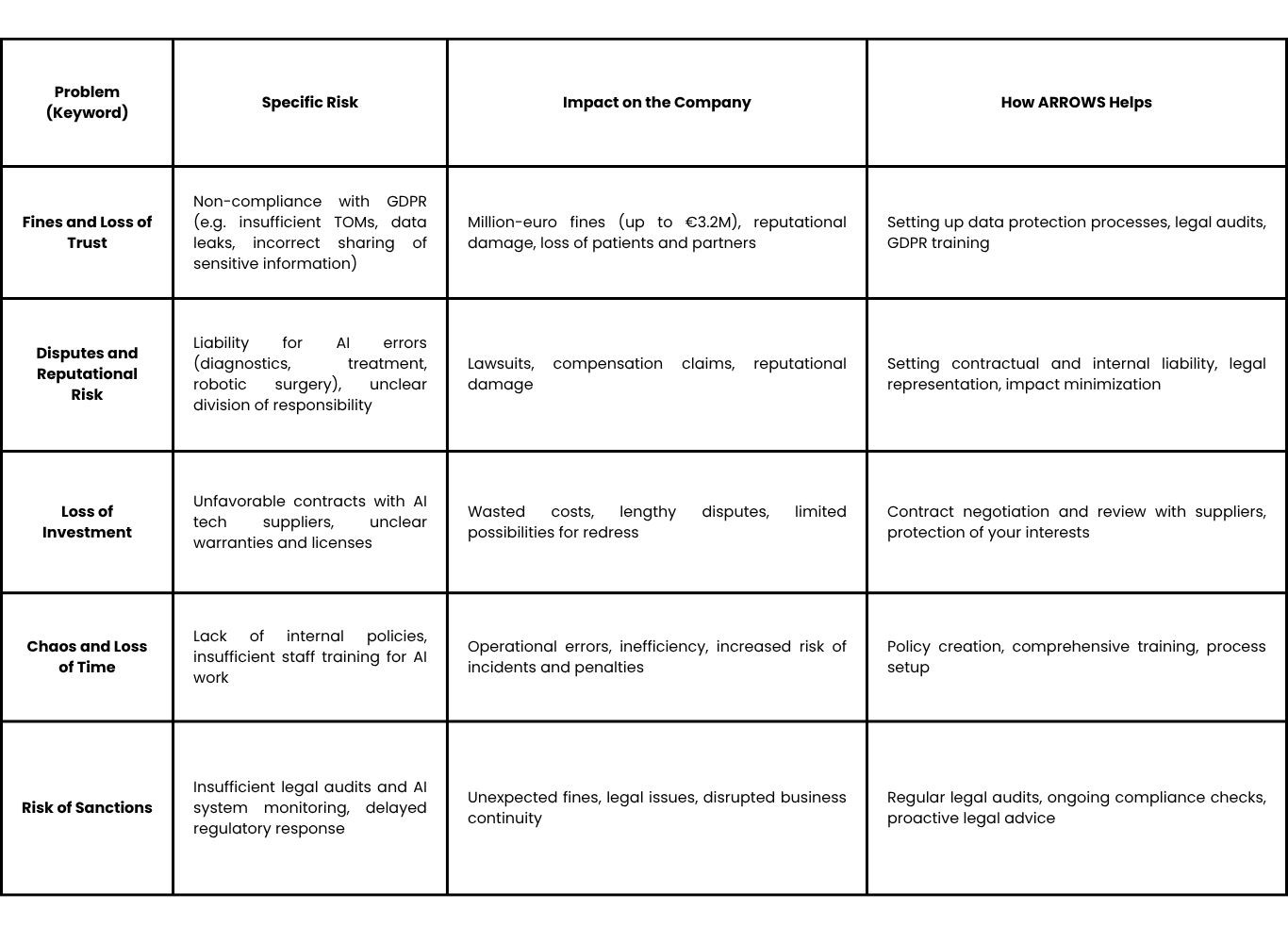

The 5 most common problems that ARROWS lawyers solve for clients in this area:

1. Million-dollar fines and loss of trust: Failure to comply with GDPR and other regulations for sensitive health data.

The healthcare sector is subject to strict rules on the protection of patients and their data. Failure to comply with these rules can lead to multimillion fines and reputational damage. Fines for inadequate technical and organizational measures (TOMs) are common, for example for data transfers via the Meta Pixel or for server vulnerabilities that lead to ransomware attacks. The average fine for inadequate TOMs increased significantly in 2024, with cases such as the €3.2 million fine imposed on a Swedish pharmacy that unknowingly transferred customer data to Meta. Inadequate data security can also cost a provider the trust of patients and partners, which has long-term negative effects on the business.

2. Legal disputes and reputational risk: Liability for AI errors in diagnosis, treatment, or robotic surgery.

In practice, the question often arises as to who is responsible for a potential error made by artificial intelligence—for example, a misdiagnosis, inappropriate treatment recommendation, or surgical robot failure. Cases such as an AI system that missed a small tumor on a mammogram or the IBM Watson for Oncology system, which recommended dangerous treatment combinations and was discontinued in 2023, demonstrate the real risks. Determining liability is complicated because it may involve doctors, hospitals, and technology suppliers. AI errors can lead to legal disputes, claims for damages, and serious reputational risk. In addition, patients may assert claims for breach of informed consent.

3. Loss of investment and wasted costs: Poorly drafted contracts with AI technology suppliers.

Without clear contractual arrangements with AI system suppliers, companies run the risk of not having solid legal support in the event of problems. Issues such as warranties, service support, liability for defects, and data protection when transferring data to the technology provider may be unclear. Hospitals are in a good position to negotiate licensing agreements so that AI suppliers bear a fair share of responsibility.

Poorly negotiated contracts can lead to wasted costs, lost investments, and lengthy disputes that distract from the core business. One example is a US court case involving the use of an AI algorithm to determine coverage under Medicare Advantage plans, in which the court allowed the breach-of-contract claims to proceed.

4. Chaos and wasted time: Lack of clear internal guidelines and insufficient staff training.

Staff training and clear internal rules are key to the successful and safe use of AI technologies in healthcare. Without them, processes can become chaotic, employees can feel uncertain, and regulatory requirements may not be adequately met. In 2017, the European Parliament emphasized the need for high-quality training for healthcare personnel in the use of robotic technologies. Inadequately trained staff and unclear guidelines increase the risk of errors, incidents, and inefficient use of expensive AI tools, leading to wasted time and potential penalties from regulatory authorities.

5. Risk of sanctions and loss of trust: Inadequate legal audit and ongoing monitoring of AI systems.

Many organizations lack robust processes for vetting AI tools prior to deployment and for ongoing monitoring of their compliance. This leads to undetected compliance gaps and potential risks. Without regular legal audits and ongoing control mechanisms, companies expose themselves to the risk of unexpected incidents, data leaks, or sanctions. This can seriously damage the reputation and trust of patients and business partners.

In the field of AI in healthcare, we often encounter a phenomenon where users, including doctors, tend to rely too much on AI tools because they often deliver excellent results. At the same time, however, doctors express concerns about legal liability for errors that AI tools could cause. This contradiction between excessive optimism (“techno-optimism”) and concerns about liability creates a critical vulnerability. The human factor, not AI alone, is becoming a significant source of risk. Inadequate internal guidelines, insufficient training, and insufficient human oversight are directly identified as causes of errors and compliance issues.

ARROWS services, particularly in the areas of internal guideline development and comprehensive training, directly address this issue at the human-AI interface. By focusing on the responsible use of AI and ensuring proper human oversight, ARROWS helps clients avoid the “techno-optimism trap,” building real trust between employees and patients and ensuring the ethical and safe deployment of AI.

Case studies and expert insights: How ARROWS protects your innovations and ensures peace of mind.

Case study 1: Ensuring GDPR compliance for AI diagnostics.

Imagine that your clinic is implementing an innovative AI system for diagnosing skin diseases that works with huge amounts of sensitive patient data. Without the right legal framework in place, you are exposing yourself to huge GDPR fines. ARROWS' lawyers helped the client with a comprehensive legal audit of data flows, review of patient consents, and the implementation of robust technical and organizational measures (TOMs).

Mechanisms for data minimization, pseudonymization, and encryption were implemented, and the system was set up to meet transparency and data subject rights requirements. Thanks to ARROWS' proactive approach, the client avoided the risk of the multimillion fines that are common in EU healthcare (e.g., a Swedish pharmacy was fined EUR 3.2 million over an incorrectly configured Meta Pixel). The client strengthened patient and regulatory confidence, secured peace of mind, and was able to focus fully on providing top-quality healthcare.
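The following minimal Python sketch shows what pseudonymization and data minimization can mean at the level of a single patient record. It is an illustration only. The record layout and the names `REQUIRED_FIELDS`, `pseudonymize_id`, and `prepare_record` are hypothetical. The sketch replaces the patient identifier with a keyed HMAC-SHA256 pseudonym and keeps only the fields a diagnostic model would need. Encryption in transit and at rest is a separate measure and is not shown.

```python
import hashlib
import hmac

# Hypothetical: the only fields the diagnostic model needs (data minimization).
REQUIRED_FIELDS = {"age", "skin_type", "lesion_image_id"}


def pseudonymize_id(patient_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 pseudonym.

    The same ID and key always yield the same pseudonym, so records can still be
    linked; without the key, the pseudonym cannot be recomputed from a known ID.
    """
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, secret_key: bytes) -> dict:
    """Drop fields the model does not need and swap the patient ID for a pseudonym."""
    minimized = {k: v for k, v in record.items() if k in REQUIRED_FIELDS}
    minimized["pseudonym"] = pseudonymize_id(record["patient_id"], secret_key)
    return minimized


if __name__ == "__main__":
    # Example key only; in practice it would be held in a separate key store.
    key = b"example-key-kept-outside-the-dataset"
    raw = {
        "patient_id": "P-000123",
        "name": "Example Patient",
        "address": "Example Street 1",
        "age": 36,
        "skin_type": "III",
        "lesion_image_id": "img-42",
    }
    print(prepare_record(raw, key))
```

A keyed hash is used here instead of a plain hash because patient identifiers are often guessable. Note that pseudonymized data still counts as personal data under the GDPR, because whoever holds the key can re-identify patients.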

Case study 2: Responsibility for an AI error in robotic surgery.

A hospital implemented a state-of-the-art AI-assisted surgical robot. During a complex procedure, an unexpected AI error occurred, resulting in damage to the patient's healthy tissue. A dispute arose over who was responsible—the hospital, the surgeon, or the robot manufacturer? ARROWS lawyers immediately launched a detailed investigation, which included an analysis of internal protocols, contracts with the supplier, and technical logs from the AI system. They drew on their in-depth knowledge of the complexities of determining liability in AI cases, where the responsibilities of the doctor, hospital, and technology supplier overlap.

Thanks to carefully crafted contractual arrangements with the supplier, which had been negotiated for the client in advance, we were able to minimize the financial impact on the hospital and effectively negotiate an out-of-court settlement with the injured patient. The hospital avoided a lengthy and costly lawsuit, protected its reputation, and gained peace of mind knowing that it has legal support to resolve such complex situations.

Need help with this topic?

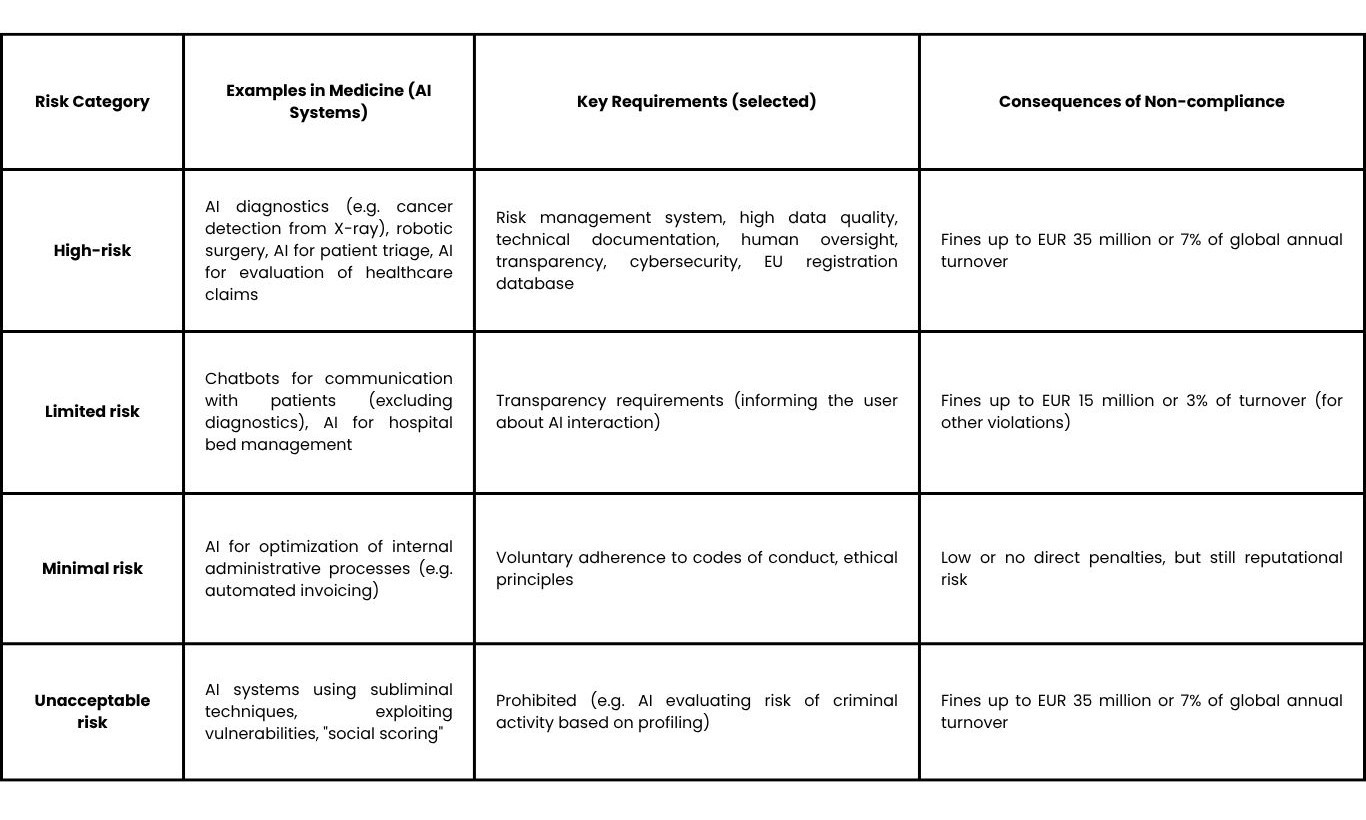

Expert insight: Navigating the EU AI Act – What it means for your healthcare AI systems.

The European Union has adopted the groundbreaking AI Act (Regulation (EU) 2024/1689), which will fundamentally affect the development and use of AI systems, especially in healthcare. Many Czech companies have not yet had time to prepare for its complex requirements, which exposes them to significant risk, lost time, and potential fines. The AI Act classifies AI systems according to their level of risk. For healthcare, the key point is that AI-based software that must undergo conformity assessment under the Medical Device Regulation (MDR), i.e., most AI diagnostic and therapeutic tools (Class IIa, IIb, III), is automatically considered “high risk.” This means strict requirements for:

- Risk management system: A continuous process throughout the AI system's lifetime.

- Data quality: High-quality data sets for training to minimize the risk of discrimination and inaccuracies.

- Technical documentation and records: Detailed information about the system, its purpose, the training process, and automatic recording of activities.

- Transparency and human oversight: The system must be sufficiently transparent for users to interpret its outputs and must allow for effective human oversight.

- Cybersecurity: Ensuring an adequate level of security and robustness.

Failure to comply with these requirements can result in fines of up to €15 million or 3% of total worldwide annual turnover, whichever is higher. The highest tier, up to €35 million or 7%, applies to prohibited AI practices. ARROWS lawyers regularly deal with these issues in practice and can help with reviewing your quality management system, preparing technical documentation, and conducting a single conformity assessment for the MDR and AI Act.
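The following minimal Python sketch makes the classification rule and the fine ceilings concrete. It is a simplification and not a legal test. Following the text, it assumes that MDR Class IIa, IIb, and III software requires notified-body assessment and is therefore high-risk under Article 6(1) of the AI Act. It encodes the two main fine tiers of Article 99: €35 million or 7% for prohibited practices, and €15 million or 3% for most other obligations, including the high-risk requirements. For SMEs, the lower of the two amounts applies. The names `is_high_risk`, `requirements_for`, `max_fine_eur`, and `FINE_TIERS` are hypothetical.

```python
# Simplifying assumption from the text: MDR Class IIa, IIb and III software needs a
# notified-body conformity assessment, so it is high-risk under Article 6(1) AI Act.
NOTIFIED_BODY_CLASSES = {"IIa", "IIb", "III"}

# The high-risk requirement areas listed above.
HIGH_RISK_REQUIREMENTS = [
    "risk management system",
    "data quality",
    "technical documentation and records",
    "transparency and human oversight",
    "cybersecurity",
]

# Article 99 AI Act fine tiers: (fixed amount in EUR, percent of worldwide annual turnover).
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),  # covers the high-risk requirements
}


def is_high_risk(mdr_class: str) -> bool:
    """True if the (simplified) MDR class implies AI Act high-risk status."""
    return mdr_class in NOTIFIED_BODY_CLASSES


def requirements_for(mdr_class: str) -> list:
    """Return the high-risk requirement areas that apply, or an empty list."""
    return list(HIGH_RISK_REQUIREMENTS) if is_high_risk(mdr_class) else []


def max_fine_eur(tier: str, annual_turnover_eur: int, is_sme: bool = False) -> float:
    """Fine ceiling: the higher of the two amounts, or the lower one for SMEs."""
    fixed_amount, percent = FINE_TIERS[tier]
    turnover_amount = annual_turnover_eur * percent / 100
    if is_sme:
        return min(fixed_amount, turnover_amount)
    return max(fixed_amount, turnover_amount)


if __name__ == "__main__":
    print(is_high_risk("IIb"), requirements_for("I"))
    print(max_fine_eur("other_obligation", 1_000_000_000))
```

For example, `max_fine_eur("other_obligation", 1_000_000_000)` returns 30 million. For a group with €1 billion in turnover, 3% exceeds the €15 million fixed amount. In practice, classification also depends on the intended purpose and on MDR Rule 11. The class lookup is therefore only a starting point for legal review.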

International reach: ARROWS International in action – Our experience extends beyond borders.

Did you know that ARROWS lawyers have solved client problems in the field of AI regulation in healthcare both within and outside the EU? Whether it's implementing AI diagnostics in the Arab world or supplying robotic surgical systems to Latin American countries, ARROWS lawyers routinely deal with these issues in practice. Thanks to the ARROWS International network, which has been built up over ten years, legal services are also provided outside the Czech Republic. This provides peace of mind that innovation and expansion are not limited by borders and that there is a reliable partner for complex international legal issues in the field of AI and healthcare.

This sets ARROWS apart from firms limited to domestic or EU law. For B2B clients, it means genuine global reach and an insider view of international trends and challenges, making ARROWS a capable partner for their international interests and expansion plans.

Why ARROWS is your ideal partner for AI in healthcare: Peace of mind, trust, and efficiency.

Long-term experience and proven portfolio

ARROWS Law Firm has experience in providing long-term services to clients. ARROWS' portfolio includes more than 150 joint-stock companies, 250 limited liability companies, and 51 municipalities and regions. ARROWS works primarily for entrepreneurs, which means a deep understanding of clients' business needs and goals. Experience from long-term cooperation with such an extensive and diversified client portfolio provides unique insight into the challenges and opportunities that companies face.

Modern approach and use of artificial intelligence

ARROWS is a modern company that uses artificial intelligence and modern methods for its clients. Not only does it help with the legal aspects of AI, but it also actively uses AI to optimize internal processes and provide more efficient services—for example, for contract analysis, legal research, or automation of compliance checks. This enables us to provide faster and more accurate advice, saving our clients time and money.

Comprehensive services under one roof

Thanks to its partner companies (e.g., tax consulting, subsidy consulting), ARROWS provides comprehensive services under one roof. For clients, this means simplified administration and the certainty that all related aspects of their business – from legal to tax to subsidies – are handled in a coordinated and efficient manner.

Award-winning expertise

ARROWS is proud to have been named “Innovative Law Firm of the Year” in the field of artificial intelligence in 2024. This award is a testament to ARROWS' commitment to innovation and its ability to provide top-notch legal services in the dynamic AI environment.

The legal and regulatory environment for AI in healthcare is not static. It keeps evolving, with new regulations and refinements to existing ones. ARROWS combines a modern approach, the active use of artificial intelligence in its own work, and its “Innovative Law Firm of the Year 2024” award in the AI category. This combination shows a firm that not only responds to current legal challenges but also anticipates and adapts to future ones.

This positions ARROWS as a partner that helps clients secure the future of their businesses. For B2B decision-makers, it offers long-term security and peace of mind, knowing that their legal advisor is equipped not only to solve current problems but also to guide them through constant change.

Don't let the future catch you off guard – contact ARROWS today!

Don't expose your healthcare facility to unnecessary risks. At ARROWS, we understand the needs of modern hospitals, clinics, and laboratories, and we can connect innovation with legal certainty.

Contact ARROWS today. Together, we will put measures in place to ensure that artificial intelligence moves your organization forward rather than threatening it. Our experts will show you how to turn the challenges of AI in your daily practice into a competitive advantage, with full legal support. Your journey to secure and successful healthcare digitization begins with a consultation, and ARROWS is ready to be your guide to the digital future of healthcare.

Read our articles:

- 28 Nov 2024 - Legislative Update on Health Law: Changes for non-medical professions and the development of computerisation of healthcare

- 19 Apr 2024 - The question of liability in the use of AI in healthcare

- 28 Mar 2024 - The contribution of artificial intelligence in healthcare

- 21 Mar 2024 - AI and personal data in 2024

- 9 Jan 2024 - We trained AI for the Czech Banking Association

- 2 Jan 2024 - Experience with the introduction of artificial intelligence in ARROWS, law firm

- 27 Nov 2023 - Exercise of copyright and property rights in relation to generative artificial intelligence

- 7 Sep 2023 - Navigating Corporate Compliance in the Age of Artificial Intelligence

- 10/7/2023 - Current State of AI Regulation in the World

- 13 Sep 2023 - ChatGPT Training

- 12 Jun 2023 - Deep Fake and Disinformation: How AI is affecting the spread of false information

- 25 May 2023 - ChatGPT Handbook

- 18 May 2023 - Statement by Jakub Dohnal for Forbes on AI Regulation

- 17 May 2023 - A Breakthrough in AI Regulation

Don't want to deal with this problem on your own? More than 2,000 clients trust us, and we have been named Law Firm of the Year 2024.
